Socrates: If I’m correct, then some of our educators are mistaken in their view that it is possible to implant knowledge into a person that wasn’t there originally, like vision into the eyes of a blind man.

Glaukon: That’s what they say.

Socrates: What our message now signifies is that the ability and means of learning is already present in the soul.

—Plato, The Republic (4th c. BCE)

In the Allegory of the Cave, excerpted above, Plato speculates, through a dialogue between Socrates and Glaukon, on how humans perceive reality and truth. This 2,400-year-old thought experiment provides a useful framework for thinking about how AI agents based on large language models (LLMs) access reality, and about the implications for how to build truly ‘intelligent’ AIs.

Shadows of copies of reality

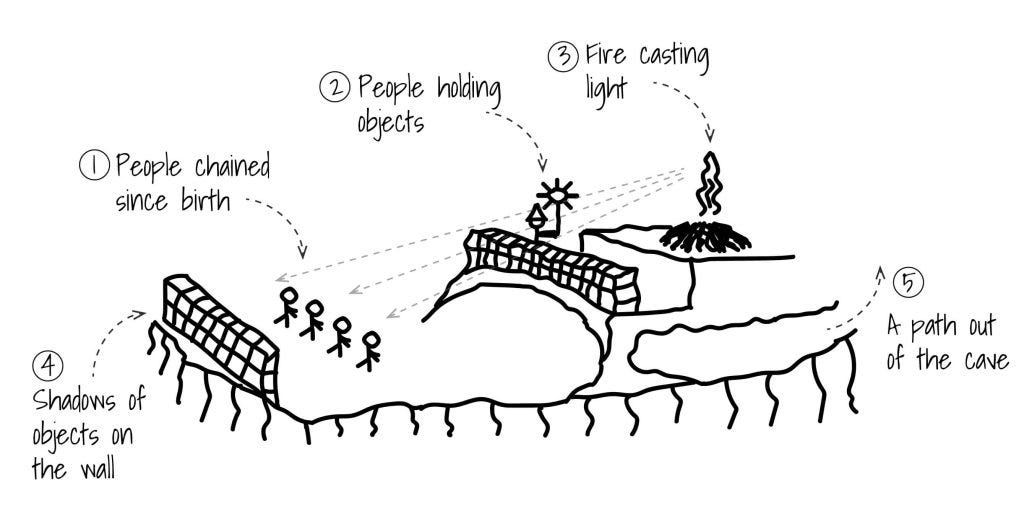

In the dialogue, Socrates imagines a group of humans held captive from birth in a cave, where they can look in only one direction, at a wall. On that wall, a fire burning behind the captives projects shadows cast by models of objects carried by other people, who are themselves concealed behind a wall running between the fire and the captives.1

As I learned in high-school English literature class, Plato’s allegory is meant to convey that many of us see only “a shadow of a copy” of the real ultimate forms of reality. Nevertheless, we have the ability to learn to perceive ultimate forms, and for doing so “the ability and means of learning is already present in the soul” (see above quote).

How humans experience and build on reality

To be more precise—expanding upon Plato’s allegory—human perception and sense-making of reality looks something like this:

Let’s break this down:

Reality is what actually exists as matter, energy, space and time. Because we perceive reality through our senses, we cannot know for certain what it consists of independent of those senses.2 Indeed, the role of the observer is fundamental to our core physical theories of the nature of existence, including relativity and quantum mechanics.

Experience consists of our perceptions of reality using our senses.

Memory is the part of experience that we record for later use, either actively (able to be voluntarily recalled) or passively (able to be recognized).

Symbols are what we use to represent our experienced memories of reality (and our internal thoughts) to others, often in written or spoken language. The distinction between experience/memory and symbols is an important one, recently explored in the work of Evelina Fedorenko, which suggests that language is primarily a tool for communication rather than thought.

Patterns are regularities that we notice in the world. These can be recognized directly from experience/memory but—powerfully for the development of human civilization and culture—are commonly recognized from symbols communicated by others.

Interpretation consists of our explanations of experience and patterns. These explanations depend heavily on the characteristics of the explainer. For example, the flavor of explanations differs hugely between a baby, a child, the ‘common man’, a research scientist and a schizophrenic person. Interpretations may have many bases, including natural laws, abstract theories, religion and other principles. Furthermore, the action of interpreting reality may be an unconscious reaction or a conscious act.3

This framework is not universally agreed upon4, but I expect most readers will recognize it as a fair description of human perception and sense-making.

How AI agents experience and build on reality

AI agents have a significantly different connection to reality. This connection depends on the details of particular agents, as I explore further below. But first, let’s apply the above framework to LLM-based agents:

Reality: Like humans, LLMs have no way to determine the fundamental nature of reality.

Experience: A key difference between humans and LLM-based agents is that LLMs do not have direct experience of reality. (As discussed below, this may be very different for embodied AI agents.)

Memory: LLMs have (extensive) ‘memory’ in the sense of access to a large amount of information, but it is not memory of experience. Rather, it is a mathematical distillation of symbols and patterns. Furthermore, an LLM’s memory of interactions with any individual user is often limited to the current session (in the computational sense of ‘session’), and may even fade within a session.
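One simple way that memory can ‘fade within a session’ is that a model only sees a fixed-size window of recent tokens (its context window); once a conversation outgrows that window, the oldest material drops out. The Python sketch below illustrates that single mechanism under stated assumptions: `ContextWindow` is a hypothetical name, splitting on whitespace stands in for real tokenization, and actual products may instead summarize or otherwise manage older context.

```python
from collections import deque


class ContextWindow:
    """Hypothetical fixed-size context: keeps only the most recent tokens."""

    def __init__(self, max_tokens):
        # A deque with maxlen silently discards the oldest items once full.
        self.tokens = deque(maxlen=max_tokens)

    def add(self, text):
        # Whitespace splitting stands in for a real tokenizer (an assumption).
        self.tokens.extend(text.split())

    def visible(self):
        """What the model can still 'remember' when producing its next output."""
        return " ".join(self.tokens)


if __name__ == "__main__":
    window = ContextWindow(max_tokens=8)
    window.add("my name is Glaukon")
    window.add("tell me about the cave and its shadows")
    print(window.visible())  # → tell me about the cave and its shadows
```

In this toy example the user’s name has fallen out of the window, so nothing produced next can draw on it, even though it was said earlier in the same session.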

Symbols: Symbols—usually in the form of ‘tokens’—are the fundamental basis of LLMs, which are trained by processing trillions of symbols/tokens. But this is very different from human use of symbols as a translation of experience/memory. For LLMs, symbols are a substitute for human experience.

Patterns: Pattern recognition and matching is at the heart of what LLMs do. LLMs are able both to incorporate patterns that have been observed by humans, and to recognize new patterns in the symbols on which they are trained. Some of the most iconic achievements of AI have been in such pattern recognition, e.g. protein structure prediction by AlphaFold, or move 37 in the second game between AlphaGo and Lee Sedol.

Interpretation: This is the other key difference between humans and LLMs. Although many credit LLMs with ‘intelligence’ and ‘creativity’, we know that what LLMs are doing is serial prediction of the most likely next token (with a tunable element of randomness). That is, for an LLM, pattern recognition and interpretation are essentially the same thing.
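To make ‘serial prediction of the most likely next token (with a tunable element of randomness)’ concrete, here is a minimal Python sketch. It is a toy, not how any production LLM is built: the hypothetical `TOY_SCORES` table stands in for the neural network that would normally score every possible next token, whole words stand in for tokens, and `temperature` plays the role of the tunable randomness (at 0 the sketch always picks the highest-scoring token; higher values spread choices across less likely ones). Real systems often add further sampling filters, such as top-k or top-p, on top of this.

```python
import math
import random

# Hypothetical toy "model": scores for each possible next word, given only the
# previous word. A real LLM computes such scores with a neural network that
# looks at the whole preceding context, over a vocabulary of subword tokens.
TOY_SCORES = {
    "the": {"cave": 2.0, "fire": 1.0, "shadows": 0.5},
    "cave": {"wall": 1.5, "the": 0.5},
    "wall": {"shows": 1.0, "the": 0.2},
    "shows": {"shadows": 2.0, "the": 0.1},
    "shadows": {"of": 1.0},
    "of": {"the": 1.0},
    "fire": {"behind": 1.0},
    "behind": {"the": 1.0},
}


def toy_score_fn(tokens):
    """Return candidate next words and their raw scores (logits)."""
    table = TOY_SCORES.get(tokens[-1], {"the": 1.0})
    vocab = list(table)
    return vocab, [table[w] for w in vocab]


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature rescales scores first."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(vocab, logits, temperature, rng):
    """Pick one next token: greedy at temperature <= 0, otherwise sampled."""
    if temperature <= 0:
        # No randomness: always take the single most likely token.
        best = max(range(len(vocab)), key=lambda i: logits[i])
        return vocab[best]
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


def generate(prompt, score_fn, steps, temperature=1.0, seed=0):
    """Serial prediction: add one token at a time, feeding each output back in."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(steps):
        vocab, logits = score_fn(tokens)
        tokens.append(next_token(vocab, logits, temperature, rng))
    return tokens


if __name__ == "__main__":
    print(" ".join(generate(["the"], toy_score_fn, steps=5, temperature=0)))
    # → the cave wall shows shadows of
    print(" ".join(generate(["the"], toy_score_fn, steps=5, temperature=1.0)))
```

In this sketch, nothing in the loop explains or interprets anything: each step only looks up scores, picks a token, and feeds the result back in as input.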

In summary, the two key differences between human and LLM access to reality are:

Humans perceive reality through our senses, while LLMs perceive reality through training based upon symbols that represent reality. Those symbols may be created by humans or computational techniques (including other AI models).

Humans consciously or unconsciously interpret patterns and experience. LLMs engage in next-token prediction to generate outputs. While this next-token prediction is hugely effective in many contexts (due to high LLM dimensionality and training on massive data sets, post-training refinements, system prompts, and other factors), it is fundamentally different from flexible interpretation.

These are important differences: we should not expect computer models to faithfully replicate human behavior when they neither have sensory access to reality, nor react to that reality in a flexible way.

The precise implications of these differences are hard to know, because of the extreme complexity of the interface between intelligence and reality. However, a few implications are apparent:

We should not expect LLMs to develop effective world models, much less to adjust their world models in response to reality (as humans do semi-automatically).

We should not expect LLMs to be able to develop fundamentally new insights. LLMs can produce a simulacrum of insight by combing human knowledge for relevant insights and/or combining existing, related insights in new ways. The latter is arguably a component of true insight: as Newton wrote, “If I have seen further it is by standing on the shoulders of Giants.” And Sam Altman would probably tell us that this can be enough as LLMs evolve. But my intuition is otherwise.

We should expect LLMs to continue to engage regularly in wildly inappropriate behavior in their interactions with humans. While it may be trivial for LLMs to deliver predictable responses in the vast majority of circumstances, their lack of direct connection to reality means that in edge cases, all bets are off (and will stay that way even as LLM scale increases).

Beyond these predictions, it seems obvious that these differences between human and LLM access to reality are a big part of why the behavior of LLMs is unpredictable from a human perspective.

However, from a technological standpoint, neither of these differences is immutable. As we continue the journey of developing and building increasingly capable AI, it is entirely possible to give AI agents more human-like access to reality.

Giving AI agents a human connection to reality

The first key difference above, that LLMs do not directly perceive reality, is actively being addressed. AI agents can be ‘embodied’, i.e. connected to sensors that perceive reality. This will happen increasingly with the development of humanoid robots like Figure and Tesla’s Optimus. While robotic senses remain inferior to human senses in many respects (but not all; visual and aural sensitivity are exceptions), there is extensive work in areas like vision, hearing and touch that will improve these capabilities significantly over time. For example, Meta has just announced its Aria Gen 2 glasses, a research project “that combines the latest advancements in computer vision, machine learning, and sensor technology”.

Short of embodiment, AIs can have a nearer connection to reality by connecting to real-world resources via the Internet and other digital networks. For a taste of what this might bring, I recommend the story of a bot that last year generated hundreds of millions of dollars in digital value through connection to an X account and a crypto trading account (funded with $50,000 in Bitcoin by Marc Andreessen).

The second key human/LLM difference, between interpretation and next-token prediction, is almost as susceptible to change. This may not seem obvious, because of the current hype-driven obsession with LLMs (and their progeny, like large reasoning models) as the path forward for AI. In reality, next-token prediction is just a contemporary eddy in the evolution of AI.

Until ChatGPT took the world by storm in late 2022, the AI research environment was significantly more diverse, using a wide variety of model architectures to derive predictions and conclusions from input data. And fortunately such research continues. For example, Meta recently introduced V-JEPA 2, which it advertises as “the next step towards our vision for AI that leverages a world model to understand physical reality, anticipate outcomes, and plan efficient strategies—all with minimal supervision”. Work on V-JEPA 2 is led by AI pioneer Yann LeCun, who has described LLMs as “an off ramp on the road to human-level AI”. Gary Marcus has been making the same point for years, recently suggesting:

Our best hope lies … [in] building alternatives to LLMs that approximate their power but within more tractable, transparent frameworks that are more amenable to human instruction.

A future of connected AI agents

The results of giving AI agents a more human-like connection to reality are extremely hard to predict, for the reason noted above: the extreme complexity of the interface between intelligence and reality. Returning to Plato, from the viewpoint of the early 21st century there appears to be a through line in thinking from the Allegory of the Cave (which remains highly relevant) to modern culture. But almost all of modernity was unpredictable (and highly contingent) from the viewpoint of Plato’s time. The path that AI will take into the future is similarly unpredictable.

Living in this time of technological progress and uncertainty can be exhilarating, at least for some (including me). It can also be frightening, and some have warned that connected super-intelligences pose an unacceptable risk, including the possibility of human extinction. While such risks deserve careful consideration (as I have written), I tend toward the belief that we will find an acceptable pathway forward in which the benefits of AI exceed its harms.

In any event, the reality is that there is “zero chance of slowing down the AI race” (as Gary Marcus opines in the post quoted above). We certainly face a future of connected AI agents, both purely computational and embodied. In this context, the best path forward, as I have recently written with Steve Cadigan, seems to be one of collective effort toward responsible AI. An important part of this effort will involve navigating the evolving connection between AI agents and reality.

The image of the Allegory of the Cave is from Ivaylo Durmonski, “Allegory of The Cave Examples In Real Life” (Sept. 18, 2024).

Philosophical approaches related to solipsism assert that only one’s own mind is certain to exist. I personally believe that some external reality does definitively exist, but I of course cannot prove it.

The interaction of consciousness and reality is a complex and highly contested topic, far beyond the scope of this blog. The deeply problematic book Mind and Cosmos by Thomas Nagel is one interesting exploration of possible approaches to this topic.

The framework was articulated in conversation with Katharine Scarfe Beckett, to whom I offer thanks.
